Transfer Learning in Reinforcement Learning

10 Dec 2016

I worked on this project with Guillaume Genthial for my course on Decision Making under Uncertainty at Stanford. We were interested in methods for transferring knowledge in reinforcement learning from a set of source tasks to a target task. The source tasks may differ from the target task in their state-action domains, dynamics (transition functions), and objectives (reward functions). More precisely, we wanted to address the case where the target task is a difficult, real-world problem on which we cannot afford to learn directly (e.g., driving a car or flying a plane), but for which we can design imperfect models and simulations (the source tasks).

We restricted our project to Q-learning with global function approximation, and to the case where each source task's state-action domain is a subset of the target state-action domain. To transfer knowledge, we first train agents on simple source tasks. We then use the learned source Q-functions to train an agent on a more complicated target task: our models learn the target Q-function using the source Q-functions as features, together with some (light) interaction with the target environment.
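The post does not spell out the exact target model, so here is a minimal sketch of the simplest version of the idea, written under assumptions: the target Q-function is a linear combination of the source Q-functions plus a bias term, and the weights are trained with semi-gradient Q-learning. Because the source state-action domain is a subset of the target one, each source Q-function is assumed to be already wrapped so it can be evaluated on target states and actions (for example by dropping the extra state dimensions). The class name `TransferQAgent` and all of its parameters are hypothetical, not taken from our code.

```python
import random
from typing import Callable, List, Sequence

State = Sequence[float]
# A (pretrained) source Q-function maps (state, action) to a scalar estimate.
# Assumption: it has already been adapted to accept target states/actions.
QFunction = Callable[[State, int], float]


class TransferQAgent:
    """Hypothetical sketch: linear Q-learning whose features are the outputs
    of pretrained source Q-functions, plus a constant bias feature."""

    def __init__(self, source_qs: List[QFunction], n_actions: int,
                 alpha: float = 0.01, gamma: float = 0.99, epsilon: float = 0.1):
        self.source_qs = source_qs
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration rate for epsilon-greedy actions
        # One weight per source Q-function, plus one bias weight.
        self.weights = [0.0] * (len(source_qs) + 1)

    def features(self, state: State, action: int) -> List[float]:
        # Source Q-values at (state, action), followed by the bias feature.
        return [q(state, action) for q in self.source_qs] + [1.0]

    def q_value(self, state: State, action: int) -> float:
        # Target Q estimate: weighted sum of the source Q-values.
        return sum(w * f for w, f in zip(self.weights, self.features(state, action)))

    def act(self, state: State) -> int:
        # Epsilon-greedy action selection on the target Q estimate.
        if random.random() < self.epsilon:
            return random.randrange(self.n_actions)
        return max(range(self.n_actions), key=lambda a: self.q_value(state, a))

    def update(self, state: State, action: int, reward: float,
               next_state: State, done: bool) -> float:
        # Semi-gradient Q-learning: move Q(s, a) toward r + gamma * max_a' Q(s', a').
        target = reward
        if not done:
            target += self.gamma * max(
                self.q_value(next_state, a) for a in range(self.n_actions))
        td_error = target - self.q_value(state, action)
        phi = self.features(state, action)
        # For a linear model, the gradient of Q(s, a) w.r.t. the weights is phi.
        self.weights = [w + self.alpha * td_error * f for w, f in zip(self.weights, phi)]
        return td_error
```

In use, the source Q-functions would come from agents trained on the source tasks; for a quick check, two hand-written stand-ins such as `lambda s, a: 1.0 if a == 0 else 0.0` can be passed in, and the agent is then trained with `update` on transitions collected from the target environment. Keeping the model linear in the source Q-values means only a handful of weights must be learned on the target task, which is one way to keep the required target interaction light.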

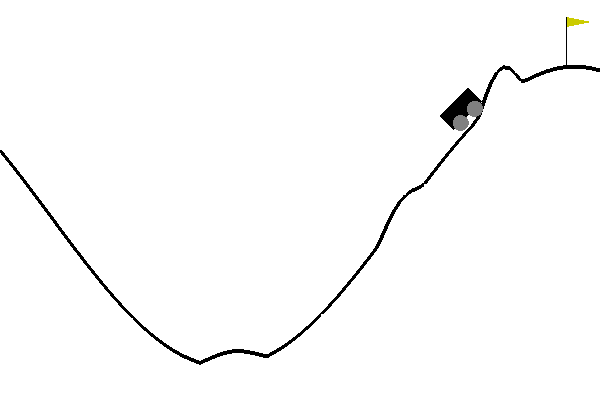

We tested our algorithms on the mountain-car benchmark from OpenAI Gym and observed general improvements in both learning speed and performance. Our code is available on GitHub and the full report is here.